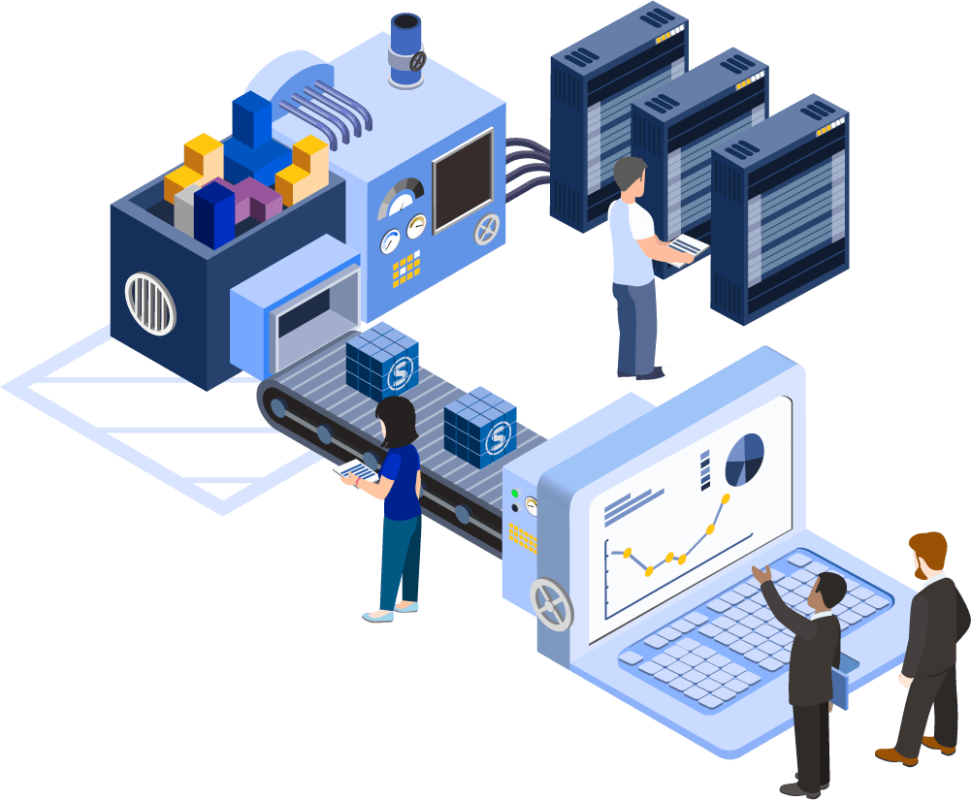

We build reliable, production-grade Big Data platforms at scale.

Data is the foundation of everything in your business that is supported by technology. When you get your data platforms right, you open up new possibilities. We build, deploy and maintain Big Data platforms of any scale so that you can make the most of your data. A Big Data platform is an IT solution that combines several Big Data tools and utilities into one packaged offering, which is then used for managing and analysing Big Data. Why this is needed is covered later in the blog; for now, consider how much data is created every day. Enterprises that do not maintain this data well are bound to lose customers. Let's get started with the basics.

Data engineering is the practice of designing and building systems for collecting, storing, and analysing data at scale. It is a broad field with applications in just about every industry. Organisations can collect massive amounts of data, and they need the right people and technology to ensure the data is in a highly usable state by the time it reaches data scientists and analysts. Cataloguing data, establishing governance, and identifying and categorising normal data and master data are all important when setting up an analysis platform.

We build large-scale data engineering platforms through systems integration, which is all about connecting systems together, automating processes that use data, and unlocking the flow of data in your organisation, enabling you to extract high-value insights that drive your business forward.

1. Receive and catch

Setting up APIs for moving large files and data, and storing them as blobs in an appropriate file system, is critical for the data to be valuable. In the pre-processing stage, filters extract the real data, which is then placed in either the data lake or a database; this is essential to ensure good data.
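The receive-and-filter step can be sketched roughly as follows. This is a minimal illustration only, not our production code: the `Sinks` class, the `is_real` filter rule, and the routing rule (binary payloads go to the data lake, everything else to the database) are all hypothetical assumptions, and in-memory lists stand in for real storage.

```python
from dataclasses import dataclass, field


@dataclass
class Sinks:
    """In-memory stand-ins for real storage (assumption for illustration)."""
    data_lake: list = field(default_factory=list)  # blob-style storage
    database: list = field(default_factory=list)   # structured storage


def is_real(record: dict) -> bool:
    """Hypothetical filter: keep records with an id and a non-empty payload."""
    return bool(record.get("id")) and bool(record.get("payload"))


def receive(records, sinks: Sinks) -> int:
    """Route each accepted record to a sink; return how many were rejected."""
    rejected = 0
    for record in records:
        if not is_real(record):
            rejected += 1
        elif isinstance(record["payload"], (bytes, bytearray)):
            sinks.data_lake.append(record)  # raw binary content goes to the lake
        else:
            sinks.database.append(record)   # structured content goes to the database
    return rejected
```

A real platform would replace the lists with calls to its blob store and database client, and the filter rule would depend on the data being received.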

2. Store and categorise

This four-stage process begins with transforming the data. The key transformation techniques we use are applying mapping rules, either through a canonical data model or by translating to another format, using OCR to extract textual information, and converting audio to text. Secondly, the data has to be filtered: sanitise it, identify unimportant data, and eliminate the noise. Thirdly, the data is stored in the appropriate storage, in relational, document, graph, column, or key-value formats. Finally, the data has to be categorised: taxonomise it, fit it to a data model, and establish data standards based on language and catalogue. All data access and movement is then based on a governance principle.
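As a rough sketch of the transform, filter and categorise stages described above, the snippet below maps records from two source systems onto one canonical model. The source names (`crm`, `billing`), the field names, and the one-rule taxonomy are hypothetical and chosen only for illustration; the storage stage is left out because it depends on the chosen store.

```python
# Hypothetical mapping rules: source field name -> canonical field name.
MAPPING_RULES = {
    "crm": {"cust_name": "name", "mail": "email"},
    "billing": {"customer": "name", "email_address": "email"},
}


def to_canonical(source: str, record: dict) -> dict:
    """Transform: rename known fields to the canonical model, drop the rest."""
    rules = MAPPING_RULES[source]
    return {canonical: record[src] for src, canonical in rules.items() if src in record}


def sanitise(record: dict) -> dict:
    """Filter: strip surrounding whitespace and drop empty values (noise)."""
    cleaned = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = value.strip()
        if value not in ("", None):
            cleaned[key] = value
    return cleaned


def categorise(record: dict) -> str:
    """Categorise: toy taxonomy that labels records with an email as contacts."""
    return "contact" if "email" in record else "uncategorised"
```

For example, `sanitise(to_canonical("crm", {"cust_name": "  Ada ", "mail": "ada@example.com"}))` yields a record with the canonical keys `name` and `email`, which `categorise` then labels as `contact`.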

3. Analyse and predict

We know how important good data is to the success of a project, and that's why we take the necessary steps to ensure the highest quality of data cleaning, normalisation, deduplication, labelling, and whatever else is required. Additionally, our Skim Engine can handle a lot of the heavy lifting when it comes to text data, PDF, or HTML. In this stage we perform various analyses, such as temporal analytics, search for specifics, sentiments, region, ground truth, language processing, content detection, content moderation, anomaly detection, facial recognition, and failure analysis. Subsequent to the analysis, we extract further insights using experiments, statistical analysis, capacity planning, and the prediction of failures and security breaches.
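To make the normalisation and deduplication steps concrete, here is a minimal sketch for text data. It is not the Skim Engine, whose internals are not described here; the function names and the choice of NFKC normalisation with case-folding are assumptions made for the example.

```python
import unicodedata


def normalise(text: str) -> str:
    """Unicode-normalise, case-fold, and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return " ".join(text.casefold().split())


def deduplicate(texts):
    """Keep the first occurrence of each normalised text, preserving order.

    Texts that are empty after normalisation are dropped.
    """
    seen = set()
    unique = []
    for text in texts:
        key = normalise(text)
        if key and key not in seen:
            seen.add(key)
            unique.append(text)
    return unique
```

This only catches exact duplicates after normalisation; near-duplicate detection would need a similarity measure on top.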

4. Decide + Act

This is the crucial phase of data engineering. Data analysis is often done through visual analytics: detecting capacity thresholds, failure thresholds, and security thresholds, and surfacing deep insights, workflows, events, alerts, and command and control.
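Threshold detection of this kind can be sketched in a few lines. The `Threshold` class, the metric names, and the alert message format below are hypothetical, and the rule is deliberately simple: an alert is raised when a metric meets or exceeds its limit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str   # name of the metric to watch
    limit: float  # alert when the metric is at or above this value
    kind: str     # e.g. "capacity", "failure", "security"


def evaluate(metrics: dict, thresholds: list) -> list:
    """Return one alert message per threshold that has been reached."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is not None and value >= t.limit:
            alerts.append(f"{t.kind} alert: {t.metric}={value} (limit {t.limit})")
    return alerts
```

In practice the returned alerts would feed a workflow or notification system rather than a plain list.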